AI-Driven Decision-Making in the Clinic. Ethical, Legal and Societal Challenges (vALID)

Short description

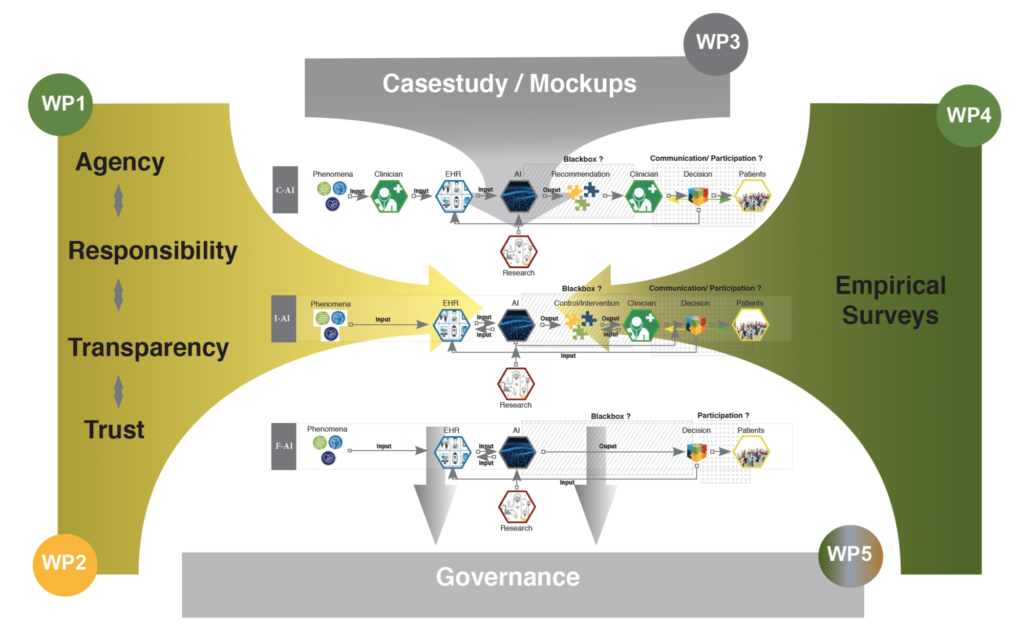

AI is on everyone’s lips. Applications of AI are becoming increasingly relevant in clinical decision-making. While many conceivable use cases of clinical AI still lie in the future, others have already begun to shape practice. The vALID project provides a normative, legal, and technical analysis of how AI-driven clinical decision support systems could be aligned with the ideal of clinician and patient sovereignty. It examines how concepts of trustworthiness, transparency, agency, and responsibility are affected and shifted by clinical AI, both on a theoretical level and with regard to concrete moral and legal consequences. One of vALID’s hypotheses is that the basic concepts underlying our normative and bioethical instruments are shifting: depending on how a system is embedded in decision-making processes, the modes of interaction between the actors involved are transformed. vALID thus investigates the following questions: Who or what guides clinical decision-making if AI appears on the scene as a new (quasi-)agent? Who is responsible for errors? What would it mean to make AI black boxes transparent to clinicians and patients? How could the ideal of trustworthy AI be attained in the clinic?

The analysis is grounded in an empirical case study that deploys mock-up simulations of AI-driven clinical decision support systems to systematically gather the attitudes of physicians and patients towards a variety of designs and use cases. The aim is to develop an interdisciplinary governance perspective on the design, regulation, and implementation of AI-based clinical systems that not only optimize decision-making processes but also maintain and reinforce the sovereignty of physicians and patients.

Funder: Federal Ministry of Education and Research (BMBF)

Acronym: vALID – AI-Driven Decision-Making in the Clinic. Ethical, Legal and Societal Challenges

Project duration: 2020–2023

Subprojects

Ethical subproject

The research profile of the Chair of Systematic Theology II (Ethics) at Friedrich-Alexander-Universität Erlangen-Nürnberg is characterized by a fundamental-theological perspective on ethics and places particular focus on issues in bioethics and social ethics. In line with this emphasis, ethics is conceived of as an academic discipline that uncovers normative questions, analyses their presuppositions and implications, develops criteria for assessing situations of moral conflict, and informs policymaking. In vALID, the team first analyses the ethical challenges arising from artificial intelligence in general and in clinical contexts in particular. In a second step, the subproject critically reflects on gaps and aporias in current descriptive heuristics and in the strategies proposed to navigate these challenges. Finally, in a third, constructive step and in close interaction with the other vALID subprojects, the team develops suggestions on how AI-based clinical decision support systems could be developed and deployed responsibly. One of the hypotheses is that, besides their potential to improve clinical processes and decision-making, these applications can both advance and constrain the sovereignty of clinicians and patients.

Legal subproject

The vALID team at the University of Hanover (Chair of Criminal Law, Criminal Procedure Law, Comparative Criminal Law and Philosophy of Law of Prof. Dr. Susanne Beck) addresses legal issues pertaining to AI-supported decision-making in clinical settings.

Transparency and a clear allocation of responsibilities play a central role, especially in medical decisions. Only in this way can physicians’ agency and patients’ right to self-determination be guaranteed as far as possible. AI’s influence on decision-making is changing our current understanding of these concepts. The vALID team plans first to analyse the legal status quo regarding the use of AI in medical decision-making. Potential solutions will then be identified and compared with those discussed in other legal cultures. In close exchange with the project partners, the empirical results will be evaluated legally, and this legal evaluation will then be linked to ethical considerations. Our focus is on developing adequate practices of medical decision-making in cooperation with AI, so that legal responsibility can still be attributed and patients are involved in a way that preserves their autonomy. One of the most important goals is to jointly develop appropriate guidelines for new legal regulations on the use of AI in medical contexts.

Technical subproject

For many years, the Speech and Language Technology Lab of DFKI has addressed topics in the biomedical domain, for instance processing clinical text to ease access to information, or predicting particular events using a combination of structured and unstructured data. In joint projects with Charité, multiple technologies and prototypes have been developed. Within the vALID project, DFKI and its partners will examine future scenarios of interaction between AI systems, medical staff and patients, particularly with regard to ethical, legal and social aspects. In addition to existing solutions developed in other projects, we will create new mock-ups to explore future human-AI interaction scenarios. One of the goals is to develop requirements for future AI and language technologies in the medical context, such as explainability or transparency, in order to maximize the decision-making sovereignty of medical staff and the data sovereignty of patients.

Empirical subproject

For more than two decades, the Digital Nephrology working group at Charité has been developing and refining an electronic health record of all kidney transplantation patients in Berlin. Based on these high-quality datasets and aiming for optimal patient care, implementing clinical decision support systems (CDSS) in clinical practice is a major goal. While pilot studies are already comparing the performance of AI algorithms with that of experienced nephrologists in predicting clinically important outcomes, systematic investigations of the acceptance of AI-driven systems by physicians and patients, and of the ethical and legal consequences involved, are sparse. Within the vALID project, we will deploy different use cases of CDSS with increasing autonomy and various machine learning explainability techniques. Thereafter, in a study involving physicians working in our outpatient transplantation facility, we will collect data on the opportunities and risks from the perspectives of healthcare professionals and patients, as well as on practical, ethical and legal issues that arise in the context of this very particular form of human-AI interaction. This could be the starting point for a data-based, interdisciplinary discussion of AI in medicine.

Selected Publications

- , , , , , , , , , :

Klinische Entscheidungsfindung mit Künstlicher Intelligenz - Ein interdisziplinärer Governance-Ansatz [Clinical Decision-Making with Artificial Intelligence: An Interdisciplinary Governance Approach]

Berlin, Heidelberg: Springer, 2023

(Essentials)

ISBN: 9783662670071

DOI: 10.1007/978-3-662-67008-8

- , , , :

A Primer on an Ethics of AI-based decision support systems in the clinic

In: Journal of Medical Ethics (2020)

ISSN: 0306-6800

DOI: 10.1136/medethics-2019-105860

- , , :

Own Data? Ethical Reflections on Data Ownership

In: Philosophy & Technology (2020)

ISSN: 2210-5433

DOI: 10.1007/s13347-020-00404-9

URL: https://link.springer.com/article/10.1007/s13347-020-00404-9

- , :

Contact-tracing apps: contested answers to ethical questions

In: Nature 583 (2020), p. 360

ISSN: 0028-0836

DOI: 10.1038/d41586-020-02084-z

URL: https://www.nature.com/articles/d41586-020-02084-z

- , :

Just data? Solidarity and justice in data-driven medicine

In: Life Sciences, Society and Policy 16 (2020), Article No.: 8

ISSN: 2195-7819

DOI: 10.1186/s40504-020-00101-7

URL: https://lsspjournal.biomedcentral.com/articles/10.1186/s40504-020-00101-7

- , , , , , , , , , , , :

Evaluation of a clinical decision support system for detection of patients at risk after kidney transplantation

In: Frontiers in Public Health 10 (2022), Article No.: 979448

ISSN: 2296-2565

DOI: 10.3389/fpubh.2022.979448

- , , , , , , , :

"Nothing works without the doctor:" Physicians' perception of clinical decision-making and artificial intelligence

In: Frontiers in Medicine 9 (2022)

ISSN: 2296-858X

DOI: 10.3389/fmed.2022.1016366

URL: https://www.frontiersin.org/articles/10.3389/fmed.2022.1016366/full

- , :

For the sake of multifacetedness. Why artificial intelligence patient preference prediction systems shouldn’t be for next of kin

In: Journal of Medical Ethics (2023)

ISSN: 0306-6800

DOI: 10.1136/jme-2022-108775

- , , , , , , , , , , :

When performance is not enough: A multidisciplinary view on clinical decision support

In: PLoS ONE 18 (2023), Article No.: e0282619

ISSN: 1932-6203

DOI: 10.1371/journal.pone.0282619

- , , , , , , :

Perspectives of patients and clinicians on big data and AI in health: a comparative empirical investigation

In: AI and Society (2024)

ISSN: 0951-5666

DOI: 10.1007/s00146-023-01825-8

- , , , :

Towards an Ethics for the Healthcare Metaverse

In: Journal of Metaverse 3 (2023), pp. 181-189

ISSN: 2792-0232

DOI: 10.57019/jmv.1318774