Summer School “Artificial Intelligence, Ethics and Human Rights” (June 20-24, 2022)

Artificial Intelligence, Ethics, Human Rights – and Tapas: Looking back at the EELISA Summer School in Madrid

Artificial Intelligence, Ethics and Human Rights – a trio of buzzwords that at first glance don’t seem to have much to do with each other. When I first heard about the Summer School, I was therefore surprised that such seemingly contradictory fields were being brought together: on the one hand, Ethics and Human Rights as focal points of the social and political sciences; on the other, Artificial Intelligence as a technical subject. But I have to admit that I had hardly dealt with Artificial Intelligence before, so perhaps it is not surprising that I did not see an obvious connection. “But why not,” I thought, “you have to try something new.” And so, on 20 June, 11 other students and I travelled to Madrid for a week to learn more about these three topics and how they relate to one another. When the alarm clock went off at half past three in the morning, I had some doubts as to whether it really always had to be something new, but once the initial tiredness had worn off, the anticipation grew.

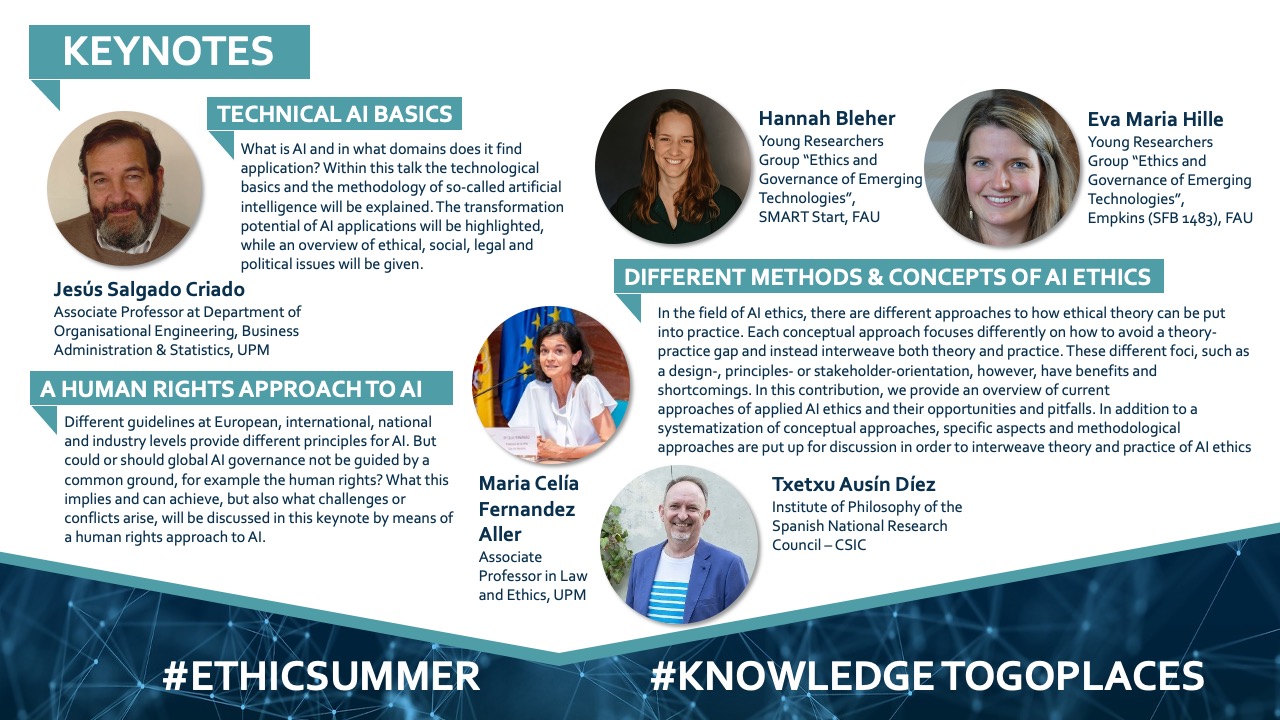

But let’s get back to the three title words. What do they actually mean? Human Rights – well, everyone knows them. They comprise a catalogue of rights that every human being has by virtue of being human. Ethics can be succinctly defined as reflection on morality – so far, so straightforward. But what is Artificial Intelligence? I’ve heard of it, but what does it actually mean? I know Siri, Alexa and Cortana. I know that Netflix sends me recommendations based on what I have watched before. And I’ve watched all the Iron Man movies and seen Tony Stark’s homegrown Artificial Intelligence, Jarvis, develop from a computer program into a human-like being. But that’s not a real answer to the question. Computer scientist Elaine Rich offers one: she describes Artificial Intelligence as research into how to make computers do things that, at the moment, humans do better.[1] In the long run, computers could thus take over and replace work that is currently done by humans. To do so, AI systems are trained in logical reasoning, problem solving and acquiring knowledge from data.

When it comes to the question of how Ethics, Human Rights and Artificial Intelligence fit together, there is a first, simple answer: all three are of great social relevance. But that is not all that connects them. Already on the first evening in Madrid, we participants saw how closely and in how many ways these three disciplines are interconnected. During a get-to-know-you evening, we were asked to answer three simple questions in small groups: Which kinds of Artificial Intelligence do we use most often in our everyday lives? What advantages does Artificial Intelligence offer? And what are its disadvantages and dangers? With that, we were already in the thick of the topic’s complexity. After all, Artificial Intelligence can be found virtually everywhere, from algorithms on social media to applications in modern medicine. And suddenly it seemed only logical to me to consider Artificial Intelligence in connection with Ethics and Human Rights. However convenient it is to have content pre-sorted for me on Amazon, Instagram and the like, and however relaxing it is to control all the technical devices in my home simply by app or voice assistant, Artificial Intelligence is also, above all, a gigantic data collector and susceptible to so-called biases, i.e. prejudices and the unequal treatment that results from them. For even if the name Artificial Intelligence suggests independence and autonomy, AI is still created by humans, and all too often their attitudes, opinions and world views find their way into the systems they build.

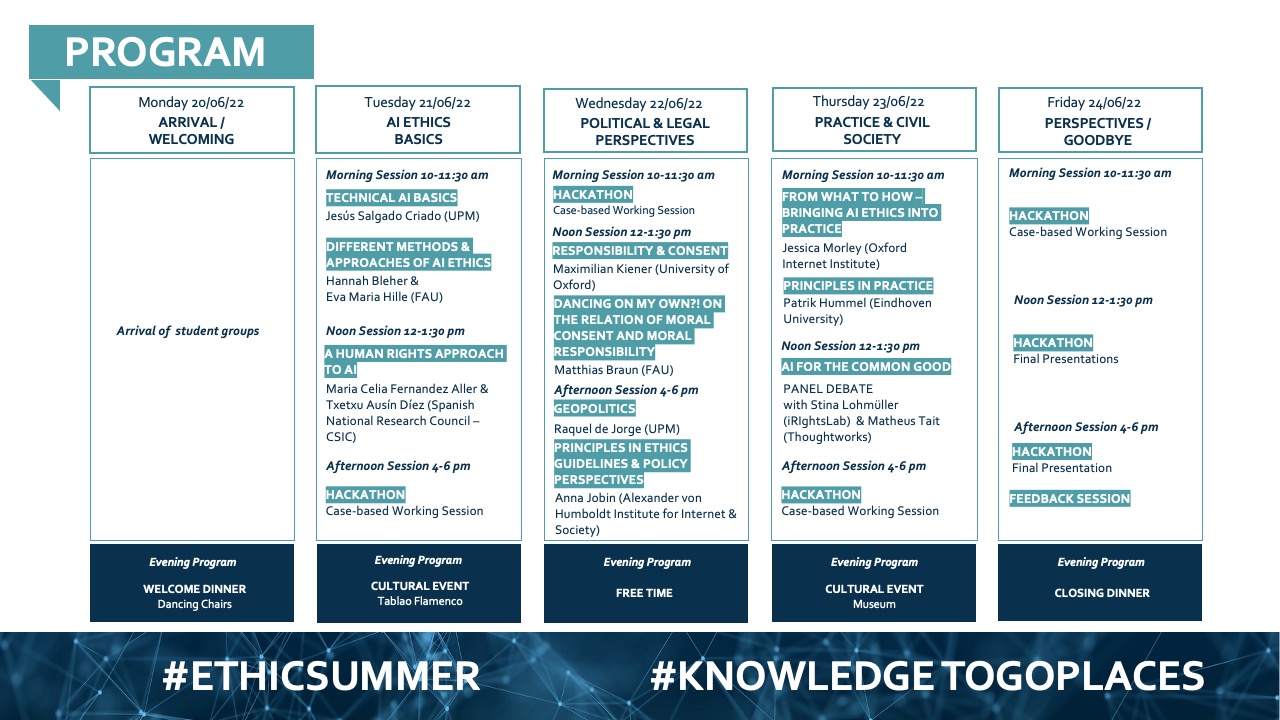

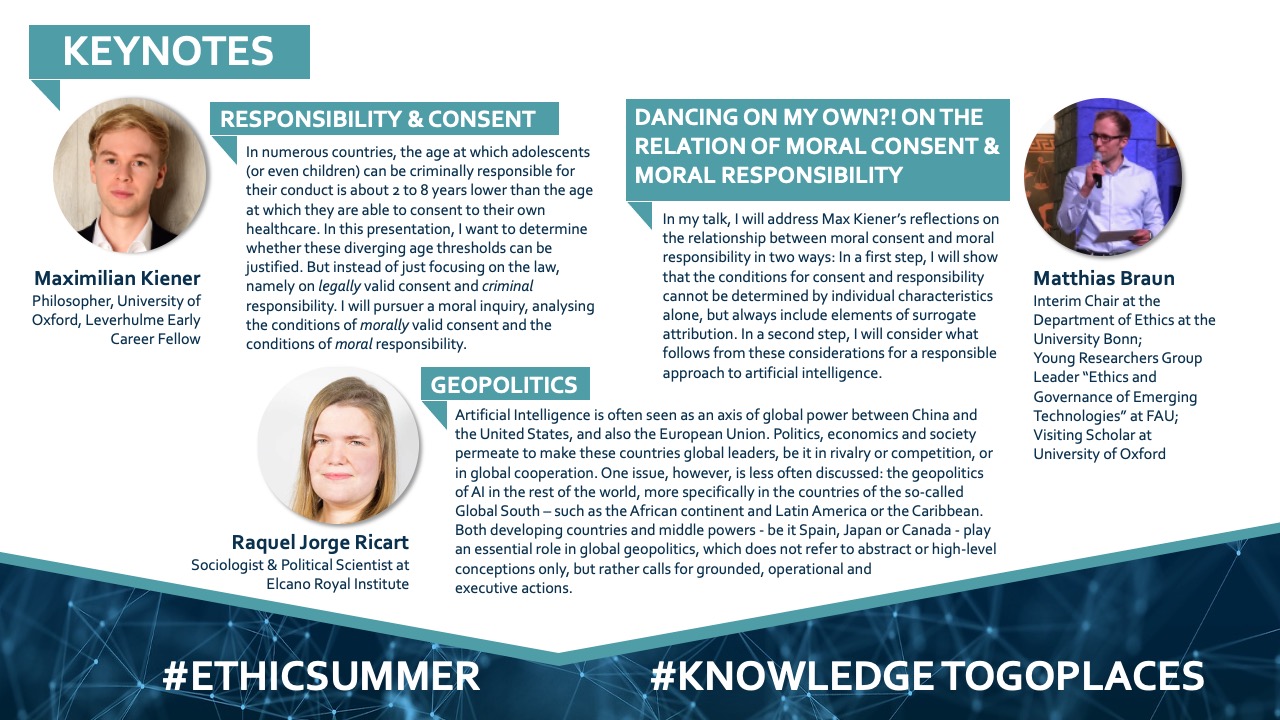

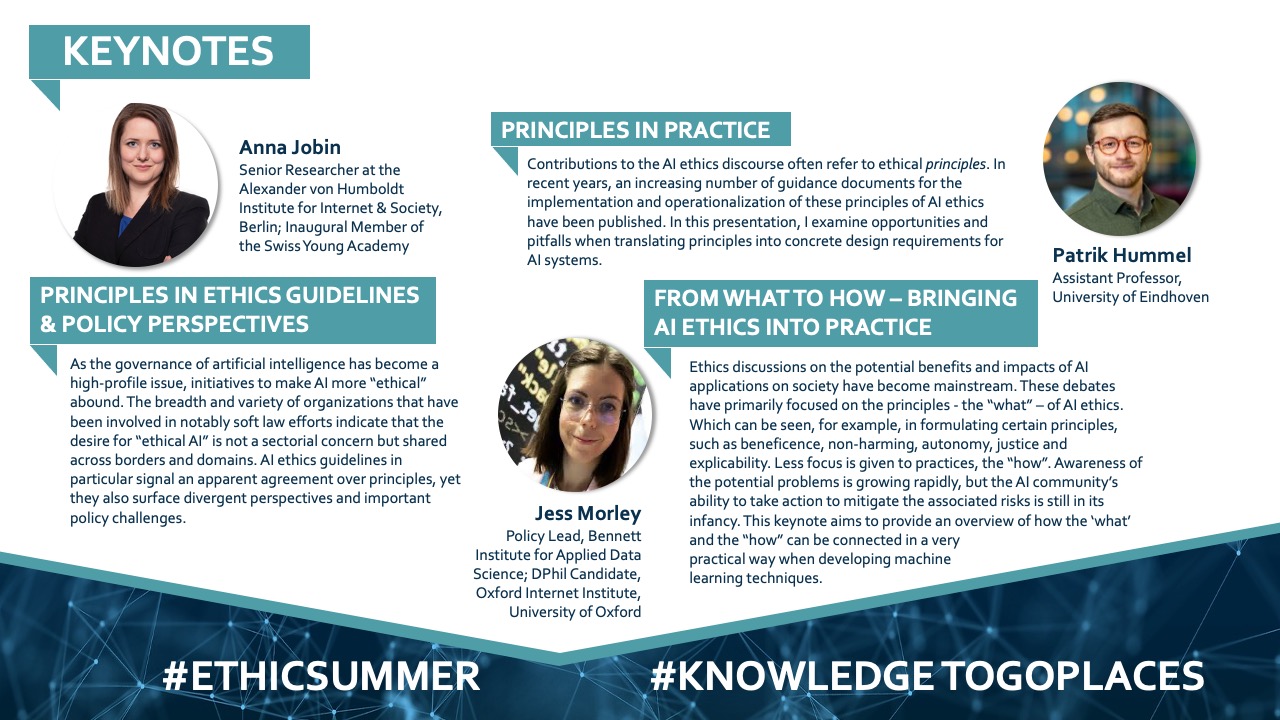

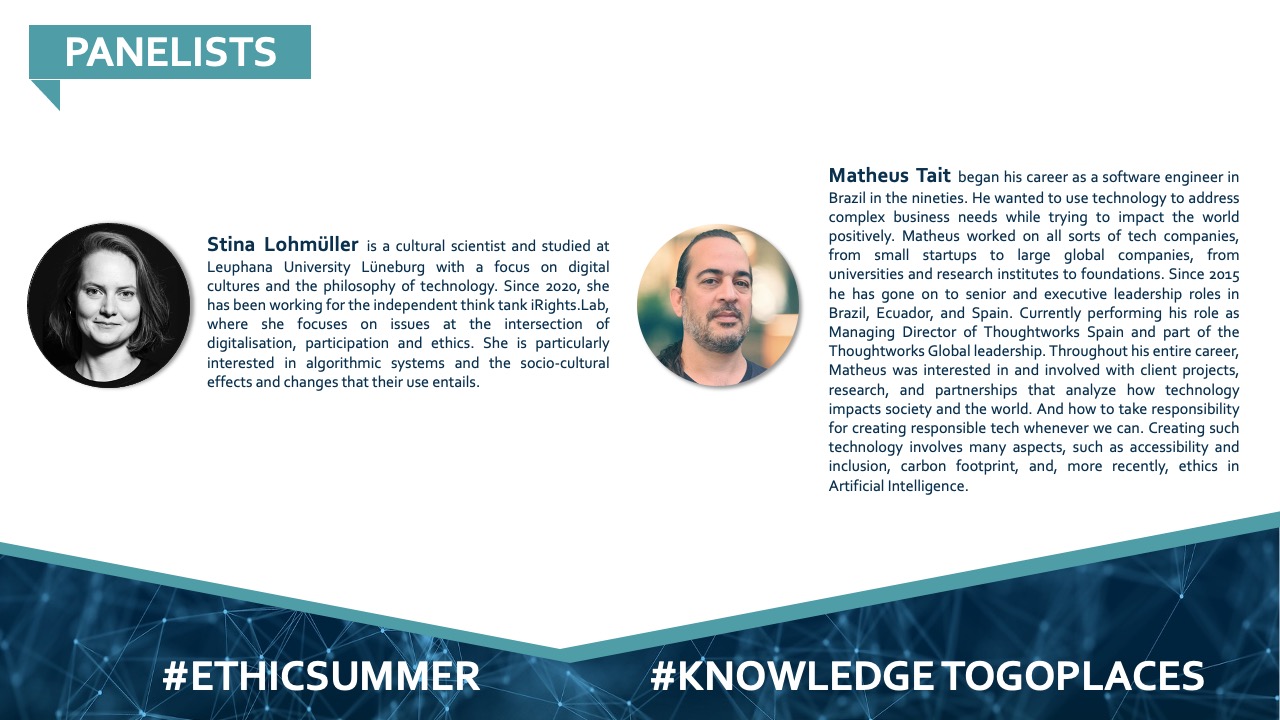

It was precisely these problems that were addressed in various lectures over the following days. The invited speakers came from a wide range of disciplines and had different focuses with regard to AI, Ethics and Human Rights. For example, we talked about flawed AI systems used by the state, such as in the Netherlands. There, people with dual citizenship were automatically classified as being at greater risk of benefit and tax fraud than Dutch people with only Dutch citizenship. We also gained an insight into the difficulties of implementing ethical guidelines for the use of AI. Since there are no uniform regulations so far, it is up to individual companies and authorities to set their own guidelines.

Insufficient transparency, accusations of ethics washing and deliberately vague wording in these documents are just some of the problems. However, the discussions were not limited to the practical implementation of ethical approaches to AI. Fundamental concepts, such as the question of responsibility, were just as important. Who is responsible for securing my data? Who must monitor compliance with ethical guidelines? And how does responsibility shift once I have given my consent, for example by accepting cookies on a website? As if this were not complicated enough, one must also ask what role moral responsibility actually plays here. All these questions and approaches show that this topic is a complex interplay of theoretical considerations and practical implementation. For a more detailed insight into the findings and discussions of the Summer School, some of these issues are explained in the videos on this page.

Besides the lectures and the input from external speakers, we also got to be active ourselves. In small groups, we prepared presentations on the use of an AI-based technology, choosing from several focal topics. While one group dealt with the use of predictive policing by the police, another presented the idea of a digital twin, a digital clone for better management and analysis of medical data. The use of AI to detect breast cancer and the use of algorithms to distribute social benefits better and more fairly were also discussed. This group work clearly showed us how difficult it is to plan and carry out a project that uses AI while taking ethical and human rights concepts and guidelines into account. Even the composition of the groups was challenging, because we students all came from different disciplines: from ethics, IT and theology, some studying for a Master’s degree, others training to become teachers. With so many different backgrounds, it was often not easy to find a common starting point at first, as everyone had different priorities and weighed the advantages and disadvantages differently. At the same time, this mixture allowed for an intensive exchange that gave us all new perspectives. This interdisciplinary work may also be part of the solution, because at the end of the Summer School everyone agreed: only together can a solution be found. AI, Ethics and Human Rights must work closely together to ensure the responsible use of AI in the future, and the Summer School was an important step in this direction.

But work was not the only focus of the week. There was also a lot of fun to be had. We used our free time to get to know Madrid. In addition to a city tour, the Spanish students also showed us the culinary side of the capital. That meant tapas, tapas and more tapas. And so, besides patatas bravas, these convivial evenings also brought cultural exchange and new friendships that we were able to take back home to Germany.

Finally, I would like to thank my fellow students, as well as Hannah and Eva for the great organisation, for the intensive exchange and the wonderful time in Madrid.

By Carima Jekel, who participated in the Summer School as an FAU student

[1] Rich, Elaine: Artificial Intelligence and the Humanities. In: Computers and the Humanities, Vol. 19 (Apr.–Jun. 1985), issue “Natural Language Processing”, pp. 117–122, <https://www.jstor.org/stable/30204398> [accessed 25.07.2022].